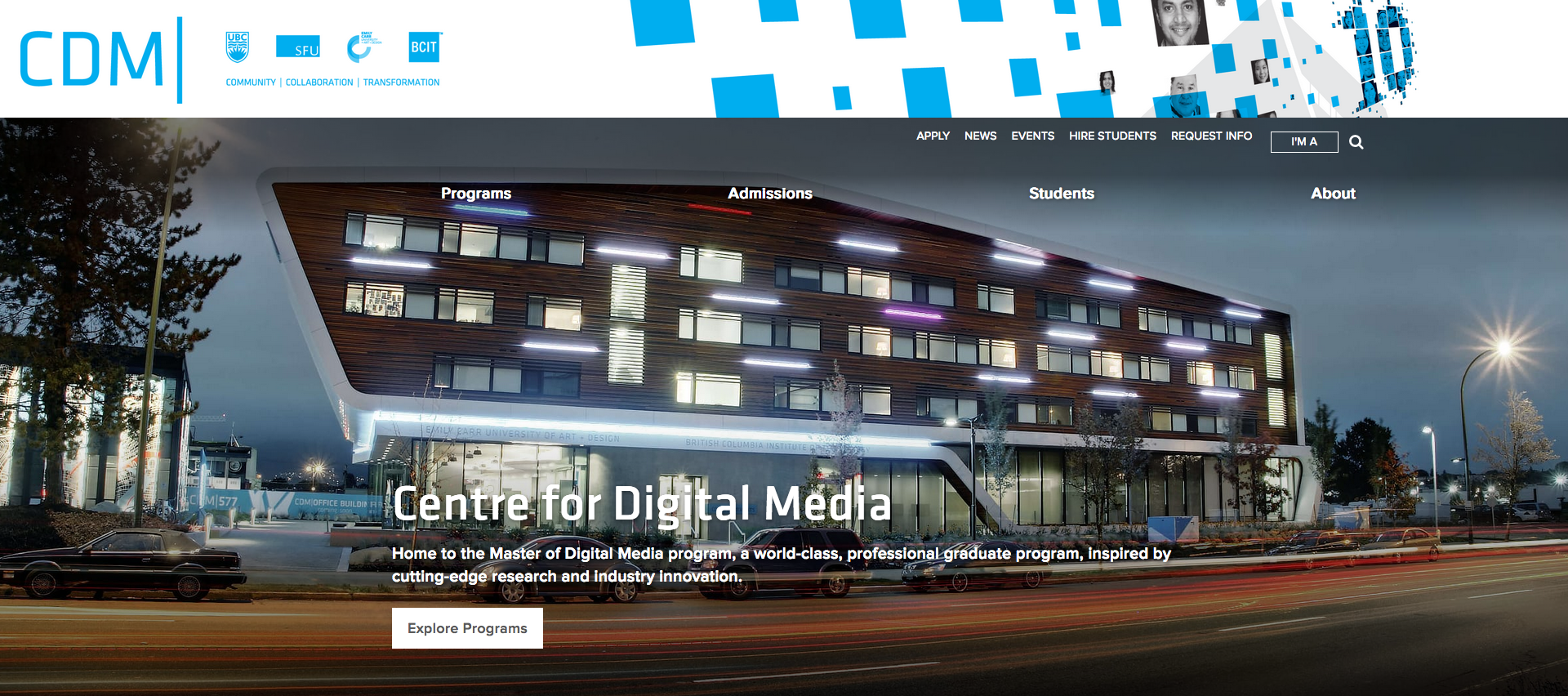

Fig 1. Robots.txt file

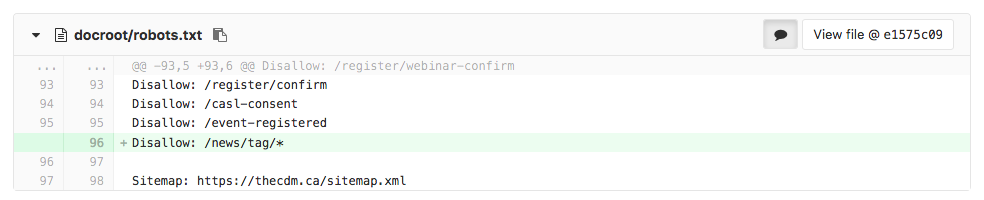

Fig 2. Hreflang sitemap

Fig 3. Homepage structured data

Centre for Digital Media

Jan 2018 - Present

I've been working as a Search Engine Optimization consultant at the Centre for Digital Media since January 2018. I'm responsible for defining and implementing new SEO strategies for the school's website (thecdm.ca). I carry out front-end & back-end optimizations and create engaging and SEO-ready content.

Crawl budget

Our website was built with Drupal and has been through a series of transitions in the past decade. This led to the accumulation of old, low-quality pages and even inaccurate content. Our crawl demand was higher than it needed to be, and the growing number of pages was preventing us from ranking well.

I focused on removing old pages and directories or redirecting them to more recent pieces of content. The idea was to signal to crawlers which sections could be dropped from the index and which pages should be crawled and indexed first.

Event pages are a good example of old, low-quality pages. The school hosts a number of events every year, and those events are heavily advertised on the website. Hundreds of event pages had been created since 2010, and none of them provided useful information to our visitors. Such a large number of low-quality pages was diluting our ranking potential, so I chose to redirect them to our main event landing page so that they would drop out of the crawlers' index.

Managing redirections

Implementing the right redirections or the right status codes can be quite daunting when hundreds (if not thousands) of pages have to be processed.

- I added manual redirections to content pages - like articles or news posts - as those pages had to be processed on a case-by-case basis.

- I used Apache rewrite rules with regular expressions for sets of pages that needed the same redirect rule. Example for our event pages:

      RewriteCond %{REQUEST_URI} ^/events/201[1234567](.+) [NC]
      RewriteRule .* https://%{HTTP_HOST}/calendar/events [R=301,L]
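Before deploying a pattern-based rule like the one for event pages, it can help to check which paths it actually catches. The sketch below is only an approximation of the Apache behaviour: it reuses the same regular expression in Python, with `re.IGNORECASE` standing in for the `[NC]` flag. The sample paths and the `redirect_target` helper are hypothetical and only serve as an illustration.

```python
import re

# Same pattern as the RewriteCond above; re.IGNORECASE mirrors the [NC] flag.
EVENT_PATTERN = re.compile(r"^/events/201[1234567](.+)", re.IGNORECASE)

# Path of the landing page used as the redirect target in the rule.
TARGET_PATH = "/calendar/events"


def redirect_target(path):
    """Return the redirect path for an old event URL, or None if the rule does not apply."""
    return TARGET_PATH if EVENT_PATTERN.search(path) else None


# Hypothetical sample paths, not taken from the real site.
samples = [
    "/events/2013/open-house",    # old event page: redirected
    "/events/2018/info-session",  # year outside 2011-2017: left alone
    "/events/2015",               # nothing after the year, so (.+) does not match
    "/calendar/events",           # the landing page itself: left alone
]

for path in samples:
    print(path, "->", redirect_target(path))
```

One detail this makes visible: because the pattern ends in `(.+)`, a bare path such as `/events/2015` is not redirected, which may or may not be the intended behaviour.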

Duplicate content

Getting rid of duplicate content was also a challenge. The most interesting issue I had was with our old mobile site, which is hosted on a subdomain (m.thecdm.ca). When our "responsive design transition" occurred, a redirect rule to our main website (thecdm.ca) was implemented, since the mobile version of our site was no longer needed. However, the redirect rule stopped working when our SSL certificate expired. The entire "m" subdomain was returning a 200 status code, making a duplicate of the site fully accessible to bots and visitors.
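A problem like this is easy to miss because the pages still load normally. As a rough sketch of how it could be caught, the snippet below takes a set of observed responses for the mobile subdomain and flags every URL that does not answer with a permanent redirect to the main host. The function names, the sample paths and the sample responses are assumptions made for illustration; in practice the status codes and `Location` headers would come from a crawler or an HTTP client.

```python
from urllib.parse import urlsplit

MAIN_HOST = "thecdm.ca"  # main site named in the text


def is_properly_redirected(status, location):
    """Return True if the response is a permanent redirect to the main host."""
    if status not in (301, 308):  # only permanent redirects count
        return False
    if not location:
        return False
    host = urlsplit(location).hostname or ""
    return host in (MAIN_HOST, "www." + MAIN_HOST)


def find_duplicates(responses):
    """Return the URLs that still expose duplicate content.

    responses maps each URL to a (status, Location header or None) pair.
    """
    return [
        url
        for url, (status, location) in responses.items()
        if not is_properly_redirected(status, location)
    ]


# Hypothetical observations, not real crawl data.
observed = {
    "http://m.thecdm.ca/": (200, None),
    "http://m.thecdm.ca/programs": (301, "https://thecdm.ca/programs"),
    "http://m.thecdm.ca/news": (302, "https://thecdm.ca/news"),
}

print(find_duplicates(observed))
```

Temporary redirects (302) are flagged on purpose here, on the assumption that a retired subdomain should redirect permanently.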

A few more things I implemented

- Hreflang tags for our program page. I chose the sitemap option to implement those tags because of a back-end limitation in our system.

- Pagination on our list pages to help reduce our crawl demand.

- Correct status codes for certain missing pages that were returning a 200 instead of a 404 (caused by a limitation in Drupal).
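To illustrate the sitemap approach to hreflang mentioned in the list above, here is a minimal sketch that builds such a sitemap with Python's standard library. It is not the file used on the site: the URLs and language codes are hypothetical, and `build_hreflang_sitemap` is a name invented for this example. The structure follows the usual convention of one `<url>` entry per language version, each listing every alternate (including itself) as an `xhtml:link` element.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"


def build_hreflang_sitemap(alternates):
    """Build a sitemap string from a dict mapping hreflang code -> URL."""
    # Register prefixes so the output uses a default namespace and "xhtml:".
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("xhtml", XHTML_NS)

    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url in alternates.values():
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = url
        # Every entry lists all language versions, including its own.
        for lang, href in alternates.items():
            ET.SubElement(
                entry,
                f"{{{XHTML_NS}}}link",
                {"rel": "alternate", "hreflang": lang, "href": href},
            )
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical language versions of a program page.
program_page = {
    "en": "https://thecdm.ca/program",
    "zh": "https://thecdm.ca/zh/program",
}

print(build_hreflang_sitemap(program_page))
```

Keeping the alternates in the sitemap rather than in each page's `<head>` means the templates do not need to change, which fits a situation where the back end limits what can be added to the page markup.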